Back to all members...

Neil Band

MSc by Research, started 2020

Neil is an MSc by Research student in the Oxford Applied and Theoretical Machine Learning Group (OATML) under the supervision of Yarin Gal. He is interested in Bayesian and graph deep learning with applications in computational biology and autonomy. He has worked on machine learning methods for the inference of Alzheimer’s Disease progression with Manolis Kellis in the MIT Computational Biology Group, and on neural network memory optimization with Stratos Idreos in the Harvard Data Systems Lab. Neil obtained his undergraduate degree in computer science and economics at Harvard College, and is a Rhodes Scholar.

News items mentioning Neil Band • Publications while at OATML • Reproducibility and Code • Blog Posts

News items mentioning Neil Band:

NeurIPS 2021

11 Oct 2021

Thirteen papers with OATML members accepted to NeurIPS 2021 main conference. More information in our blog post.

NeurIPS 2021 Workshop and Challenges

30 Aug 2021

We’re co-organising the Bayesian Deep Learning Workshop at NeurIPS 2021 as well as two challenges: Approximate Inference in Bayesian Deep Learning and Shifts Challenge: Robustness and Uncertainty under Real-World Distributional Shift. This effort is led by Professor Yarin Gal, Neil Band, Sebastian Farquhar, and collaborators.

OATML researchers to present at Stanford University Lecture Course CS25: Transformers United

22 Aug 2021

OATML graduate students Aidan Gomez, Jannik Kossen, and Neil Band will be presenting their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning that introduces Non-Parametric Transformers at the Stanford Lecture Course ‘CS25: Transformers United’ on November 1, 2021. Professor Yarin Gal, and OATML DPhil students Clare Lyle and Lewis Smith are co-authors on the paper.

The talk will be made available online.

OATML researchers to speak at Google Research

22 Aug 2021

OATML students Jannik Kossen and Neil Band will be presenting their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning at Google Research on September 14, 2021. Professor Yarin Gal, and OATML DPhil students Aidan Gomez, Clare Lyle and Lewis Smith are co-authors on the paper.

OATML researcher presents at AI Campus Berlin

06 Aug 2021

OATML DPhil student Jannik Kossen gives invited talks at AI Campus Berlin on two recent papers: Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning and Active Testing: Sample-Efficient Model Evaluation. Recordings of are available upon request. Announcements are here and here. Professor Yarin Gal, Dr Tom Rainforth, and OATML DPhil students Sebastian Farquhar, Aidan Gomez, Clare Lyle and Lewis Smith are co-authors on the papers.

OATML researchers to speak at Cohere

09 Jul 2021

OATML students Jannik Kossen and Neil Band present their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning at Cohere on July 9, 2021. Professor Yarin Gal, and OATML DPhil students Aidan Gomez, Clare Lyle and Lewis Smith are also co-authors on the paper.

Publications while at OATML:

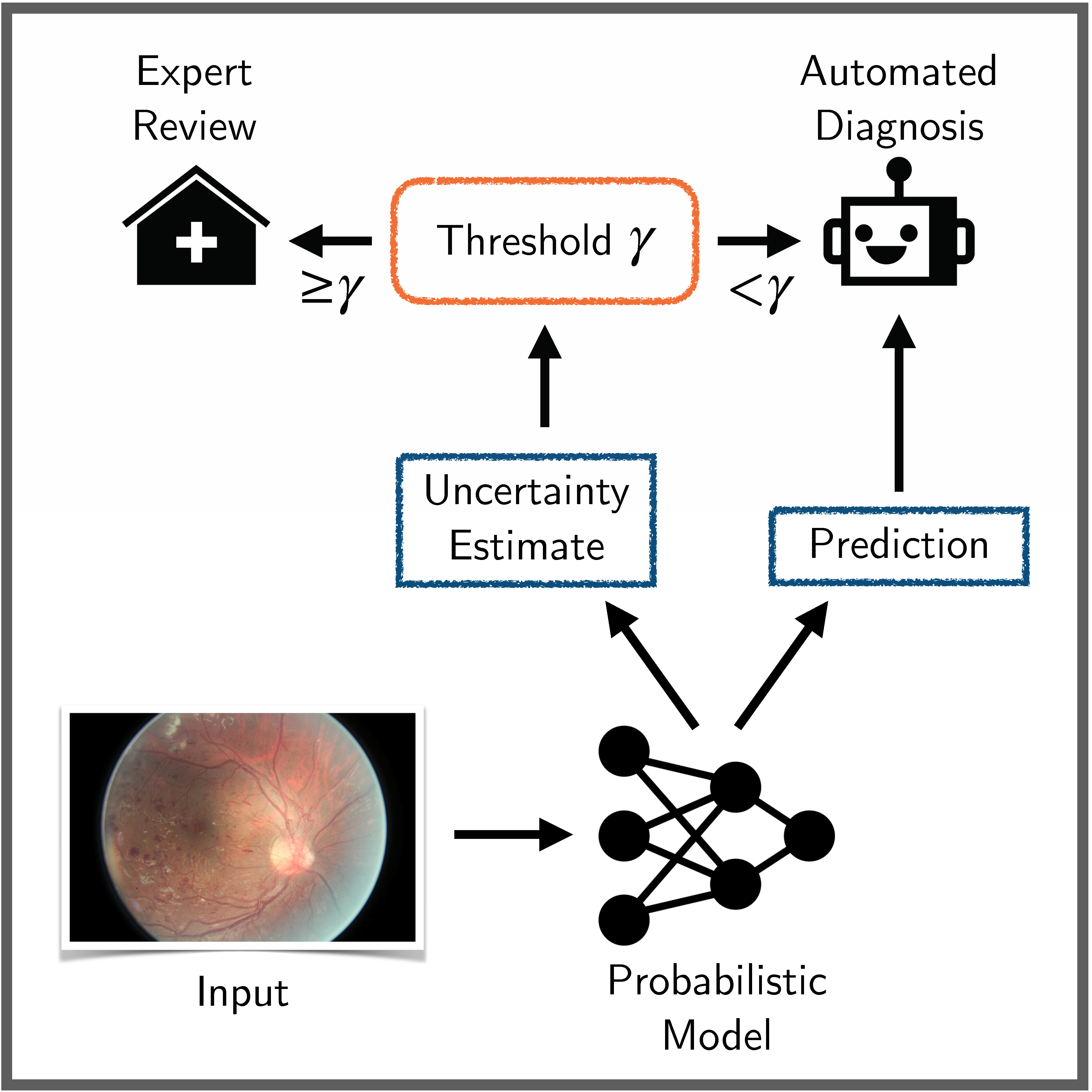

Benchmarking Bayesian Deep Learning on Diabetic Retinopathy Detection Tasks

Bayesian deep learning seeks to equip deep neural networks with the ability to precisely quantify their predictive uncertainty, and has promised to make deep learning more reliable for safety-critical real-world applications. Yet, existing Bayesian deep learning methods fall short of this promise; new methods continue to be evaluated on unrealistic test beds that do not reflect the complexities of downstream real-world tasks that would benefit most from reliable uncertainty quantification. We propose a set of real-world tasks that accurately reflect such complexities and are designed to assess the reliability of predictive models in safety-critical scenarios. Specifically, we curate two publicly available datasets of high-resolution human retina images exhibiting varying degrees of diabetic retinopathy, a medical condition that can lead to blindness, and use them to design a suite of automated diagnosis tasks that require reliable predictive uncertainty quantification. We use these... [full abstract]

Neil Band, Tim G. J. Rudner, Qixuan Feng, Angelos Filos, Zachary Nado, Michael W. Dusenberry, Ghassen Jerfel, Dustin Tran, Yarin Gal

NeurIPS Datasets and Benchmarks Track, 2021

Spotlight Talk, NeurIPS Workshop on Distribution Shifts, 2021

Symposium on Machine Learning for Health (ML4H) Extended Abstract Track, 2021

NeurIPS Workshop on Bayesian Deep Learning, 2021

[OpenReview] [Code] [BibTex]

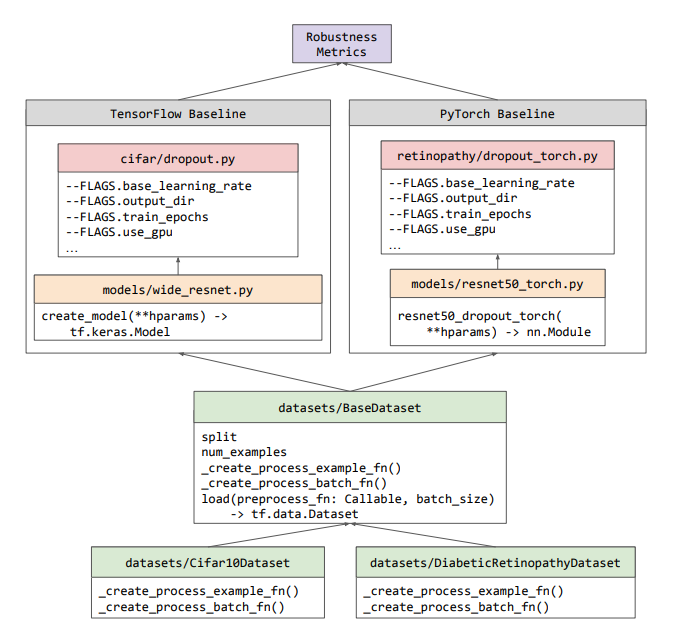

Uncertainty Baselines: Benchmarks for Uncertainty & Robustness in Deep Learning

High-quality estimates of uncertainty and robustness are crucial for numerous real-world applications, especially for deep learning which underlies many deployed ML systems. The ability to compare techniques for improving these estimates is therefore very important for research and practice alike. Yet, competitive comparisons of methods are often lacking due to a range of reasons, including: compute availability for extensive tuning, incorporation of sufficiently many baselines, and concrete documentation for reproducibility. In this paper we introduce Uncertainty Baselines: high-quality implementations of standard and state-of-the-art deep learning methods on a variety of tasks. As of this writing, the collection spans 19 methods across 9 tasks, each with at least 5 metrics. Each baseline is a self-contained experiment pipeline with easily reusable and extendable components. Our goal is to provide immediate starting points for experimentation with new methods or applications. Addi... [full abstract]

Zachary Nado, Neil Band, Mark Collier, Josip Djolonga, Michael W. Dusenberry, Sebastian Farquhar, Angelos Filos, Marton Havasi, Rodolphe Jenatton, Ghassen Jerfel, Jeremiah Liu, Zelda Mariet, Jeremy Nixon, Shreyas Padhy, Jie Ren, Tim G. J. Rudner, Yeming Wen, Florian Wenzel, Kevin Murphy, D. Sculley, Balaji Lakshminarayanan, Jasper Snoek, Yarin Gal, Dustin Tran

NeurIPS Workshop on Bayesian Deep Learning, 2021

[arXiv] [Code] [Blog Post (Google AI)] [BibTex]

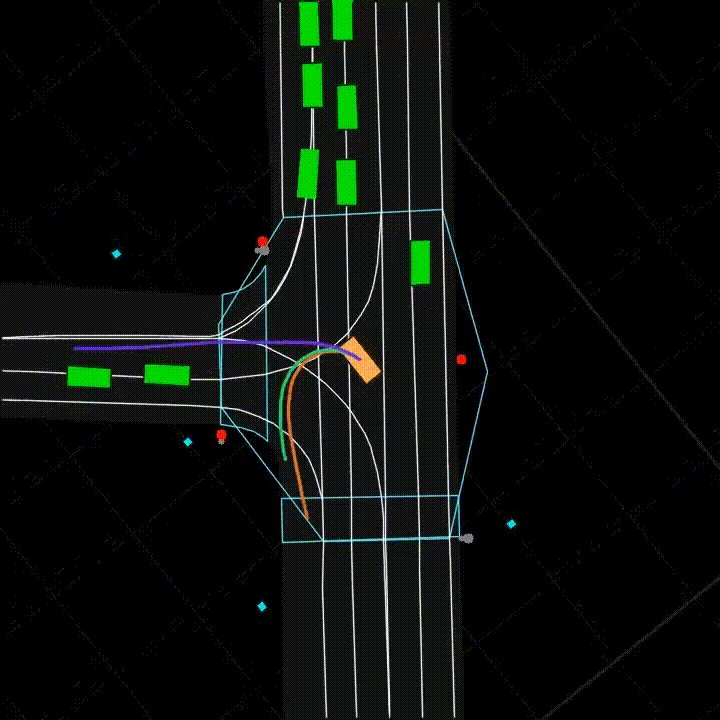

Shifts: A Dataset of Real Distributional Shift Across Multiple Large-Scale Tasks

There has been significant research done on developing methods for improving robustness to distributional shift and uncertainty estimation. In contrast, only limited work has examined developing standard datasets and benchmarks for assessing these approaches. Additionally, most work on uncertainty estimation and robustness has developed new techniques based on small-scale regression or image classification tasks. However, many tasks of practical interest have different modalities, such as tabular data, audio, text, or sensor data, which offer significant challenges involving regression and discrete or continuous structured prediction. Thus, given the current state of the field, a standardized large-scale dataset of tasks across a range of modalities affected by distributional shifts is necessary. This will enable researchers to meaningfully evaluate the plethora of recently developed uncertainty quantification methods, as well as assessment criteria and state-of-the-art baselines.... [full abstract]

Andrey Malinin, Neil Band, Alexander Ganshin, German Chesnokov, Yarin Gal, Mark J. F. Gales, Alexey Noskov, Andrey Ploskonosov, Liudmila Prokhorenkova, Ivan Provilkov, Vatsal Raina, Vyas Raina, Denis Roginskiy, Mariya Shmatova, Panagiotis Tigas, Boris Yangel

NeurIPS Datasets and Benchmarks Track, 2021

[arXiv] [BibTex] [Code]

[Competition Website] [Blog Post (OATML)] [Blog Post (Yandex Research)]

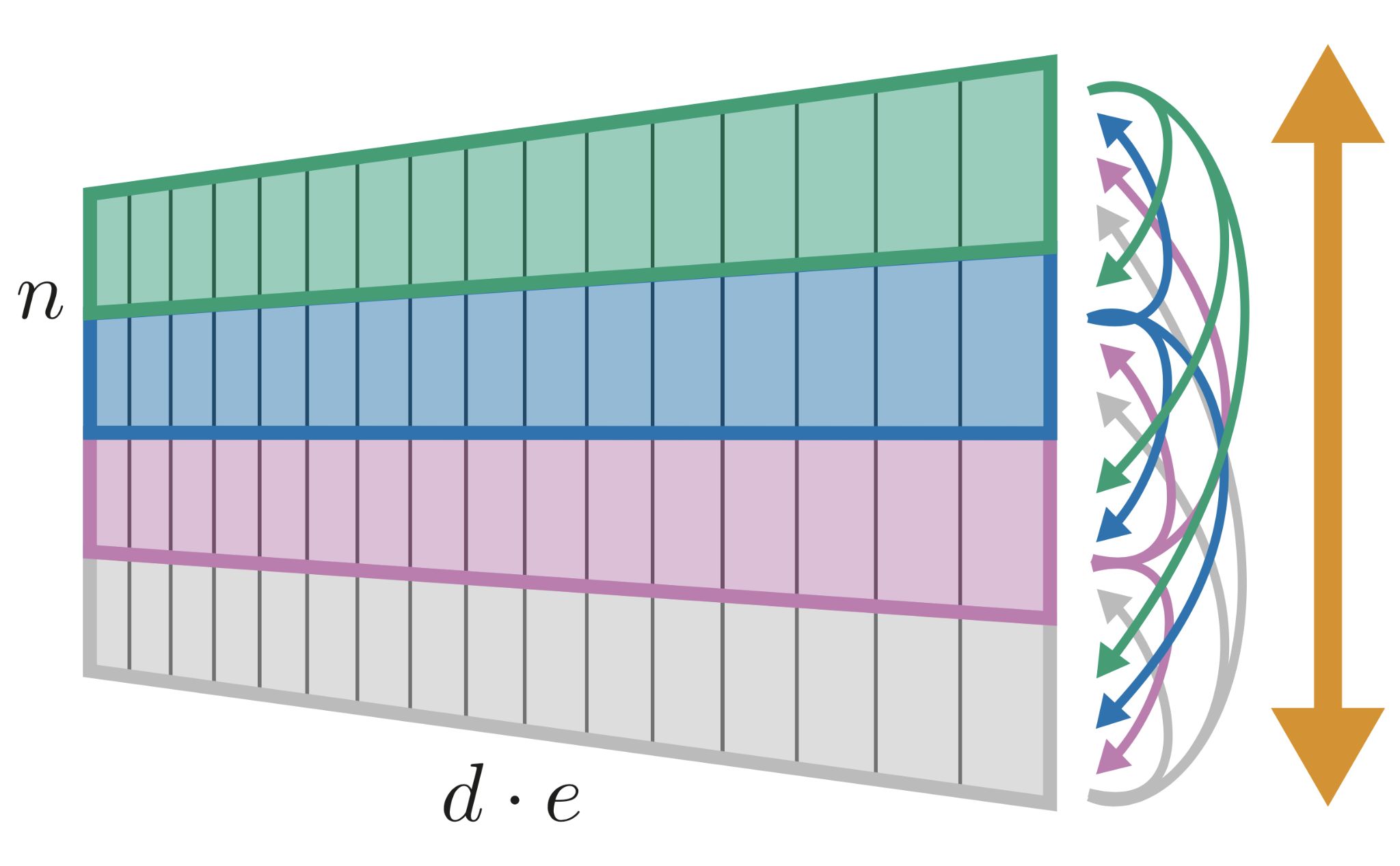

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time. Our approach uses self-attention to reason about relationships between datapoints explicitly, which can be seen as realizing non-parametric models using parametric attention mechanisms. However, unlike conventional non-parametric models, we let the model learn end-to-end from the data how to make use of other datapoints for prediction. Empirically, our models solve cross-datapoint lookup and complex reasoning tasks unsolvable by traditional deep learning models. We show highly competitive results on tabular data, early results on CIFAR-10, and give insight into how the model makes use of the interactions between points.

Jannik Kossen, Neil Band, Clare Lyle, Aidan Gomez, Yarin Gal, Tom Rainforth

NeurIPS, 2021

[arXiv] [Code]

Blog Posts

13 OATML Conference papers at NeurIPS 2021

OATML group members and collaborators are proud to present 13 papers at NeurIPS 2021 main conference. …

Full post...Jannik Kossen, Neil Band, Aidan Gomez, Clare Lyle, Tim G. J. Rudner, Yarin Gal, Binxin (Robin) Ru, Clare Lyle, Lisa Schut, Atılım Güneş Baydin, Tim G. J. Rudner, Andrew Jesson, Panagiotis Tigas, Joost van Amersfoort, Andreas Kirsch, Pascal Notin, Angelos Filos, 11 Oct 2021

Introducing the Shifts Challenge

We have released the Shifts benchmark for robustness and uncertainty quantification, along with our accompanying NeurIPS 2021 Challenge! We believe that Shifts, which includes the largest vehicle motion prediction dataset to date, will become the standard large-scale evaluation suite for uncertainty and robustness in machine learning. …

Full post...Neil Band, Andrey Malinin, Panagiotis Tigas, Yarin Gal, 06 Aug 2021